If you watched the Obi-Wan Kenobi series back in 2022 and thought, "Wow, James Earl Jones still sounds exactly like he did in 1977," you weren't alone. It was uncanny. That booming, resonant bass felt like it had been pulled straight out of a time capsule from A New Hope. But here is the thing: it basically was.

James Earl Jones didn't actually record those lines in a studio. Not in the traditional way. At 91 years old, the legendary actor had decided it was time to let the character move on without him. He was ready to retire the Sith Lord's vocal cords. But rather than just recast the most iconic villain in cinematic history, Lucasfilm did something that felt a bit like science fiction. They used a Darth Vader AI voice.

Honestly, the way it happened is a mix of high-tech wizardry and a very human respect for a legacy. It wasn't just a computer spitting out robotic syllables. It was a partnership between an aging icon and a tiny startup in a war zone.

How the Darth Vader AI Voice Actually Works

The tech behind the curtain is a company called Respeecher. They’re based in Kyiv, Ukraine. While Russian tanks were literally rolling toward the city, these sound engineers were hunkered down, perfecting the "Imperial March" of vocal frequencies. It sounds dramatic because it was.

Most people assume AI voices are just text-to-speech, like a fancy version of Siri. That’s not what happened here. Respeecher uses something called "speech-to-speech" synthesis. Basically, a human performer—someone who can nail the cadence and emotion of a scene—records the lines first. Then, the AI acts as a digital skin. It takes that performance and wraps it in the specific "timbre" of a young James Earl Jones.

It’s the difference between a puppet and an actor in a costume. The AI provided the "costume" (the 1977-era voice), but it still needed a human heart to beat.

Why James Earl Jones Signed Over His Rights

You might wonder why an actor would give away the rights to their voice forever. For Jones, it wasn't about the money—though I’m sure the check was healthy. He’s always been famously humble about the role. For years, he didn't even want his name in the credits because he felt David Prowse, the man in the suit, was the "real" actor.

To him, the voice was a special effect.

By signing off on the use of his archival recordings, he ensured that Vader would never sound "off." We’ve all seen those sequels where a character returns but sounds like a cheap imitation. Jones didn't want that for Star Wars. He wanted the character to be immortal. He acted as a "benevolent godfather" during the process, listening to the AI's output and giving his blessing.

The "Uncanny Valley" and Fan Reaction

When Rogue One came out in 2016, we heard the real James Earl Jones, but his voice had aged. It was still powerful, but you could hear the 80-something-year-old man behind the mask. It was a bit softer, a bit more labored.

Then came Obi-Wan Kenobi.

Suddenly, Vader was terrifying again. He sounded aggressive. He sounded vital. Most fans didn't even realize they were listening to a Darth Vader AI voice until the credits rolled or the Vanity Fair articles started dropping. It was a rare moment where AI didn't feel like a soulless replacement, but a restoration of a masterpiece.

Is This the Future for All Actors?

Not everyone is happy about it. Fear of exactly this was one of the central issues in the 2023 SAG-AFTRA strike. Actors are worried that studios will just buy a "voice license" once and never hire a human again. If Disney can use an AI version of a legend, why would they hire a new voice actor who needs a lunch break and a pension?


It's a valid fear. But the Vader case is unique because it had the actor's explicit consent. Compare that to Peter Cushing, who died in 1994 and never had a say in his digital resurrection as Grand Moff Tarkin in Rogue One. That recreation later became the subject of a lawsuit over who controlled the rights to his likeness.

The difference here is transparency. Jones wanted this.

The Technical Breakdown

If you're a bit of a nerd for how this stuff is built, here is the simplified version of the Respeecher process:

- The Dataset: They fed the AI archival recordings of James Earl Jones, going back to the original trilogy.

- The Training: The neural network learned the acoustic signature of his voice, from its deep resonance to the way he hit his "plosives" (those hard 'P' and 'B' sounds).

- The Source Performance: An actor (likely someone like Matthew Wood or a specialized voice artist) performed the new lines with the right emotion.

- The Conversion: The AI swapped the "source" voice with the "target" (Vader) voice while keeping the original actor's timing.
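For the nerds, here is a toy Python sketch of those four steps. To be clear, this is not Respeecher's method (which isn't public), and real systems use neural networks trained on audio rather than a handful of numbers. In this sketch, each slice of audio is reduced to a hypothetical `Frame` with four made-up features. The "training" simply averages the target's archival frames into a speaker profile, and the "conversion" keeps the performer's words and timing while swapping in the target's voice color and shifting the pitch contour into the target's range. Every name here (`Frame`, `SpeakerProfile`, `learn_speaker_profile`, `convert`) is invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean


# Toy model only (hypothetical): real speech-to-speech systems learn these
# representations with neural networks; here they are hand-picked numbers.
@dataclass(frozen=True)
class Frame:
    content: str               # what is being said (kept from the performer)
    duration_ms: int           # timing of the delivery (kept from the performer)
    pitch_hz: float            # intonation at this moment
    timbre: tuple[float, ...]  # stand-in for "voice color", i.e. who is speaking


@dataclass(frozen=True)
class SpeakerProfile:
    mean_pitch_hz: float
    timbre: tuple[float, ...]


def learn_speaker_profile(archive: list[Frame]) -> SpeakerProfile:
    """'Training': summarize the target's archival frames (must be non-empty)."""
    dims = len(archive[0].timbre)
    return SpeakerProfile(
        mean_pitch_hz=mean(f.pitch_hz for f in archive),
        timbre=tuple(mean(f.timbre[i] for f in archive) for i in range(dims)),
    )


def convert(performance: list[Frame], target: SpeakerProfile) -> list[Frame]:
    """'Conversion': keep content and timing, swap in the target's voice."""
    # Scale the whole pitch contour so its average matches the target's,
    # which preserves the performer's rises and falls.
    ratio = target.mean_pitch_hz / mean(f.pitch_hz for f in performance)
    return [
        Frame(
            content=f.content,
            duration_ms=f.duration_ms,
            pitch_hz=f.pitch_hz * ratio,
            timbre=target.timbre,
        )
        for f in performance
    ]


if __name__ == "__main__":
    # Hypothetical numbers standing in for archival Vader audio.
    archive = [Frame("ah", 100, 80.0, (1.0, 0.0)), Frame("oh", 100, 100.0, (0.5, 0.5))]
    # Hypothetical numbers standing in for the source performer's new take.
    performance = [
        Frame("I", 150, 120.0, (0.2, 0.9)),
        Frame("am", 200, 150.0, (0.3, 0.8)),
        Frame("your", 180, 180.0, (0.1, 0.7)),
    ]
    for frame in convert(performance, learn_speaker_profile(archive)):
        print(frame)
```

Run it and the words and durations come out exactly as the performer delivered them, while the pitch drops into the archive's lower range and the timbre matches the archive's average. That split, with "what and when" coming from the performer and "who" coming from the target, is the core idea behind speech-to-speech conversion.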

It’s remarkably efficient. It also makes "ADR" (automated dialogue replacement) much faster. If a director wants to change one word in a scene, they don't have to fly a 91-year-old man to a studio. The source performer records the new word, and they run it through the model again.

Ethical Concerns and the "Ghost" in the Machine

We have to talk about the "ghoul factor." Some people find it creepy. There is something inherently ghostly about a voice continuing to speak after an actor has passed or retired. As of 2026, we’re seeing more of this—not just in Star Wars, but in video games and documentaries.

There is a risk of "vocal stagnation." If we keep using the same five iconic voices forever, do we lose the chance to find the next James Earl Jones? Probably. But for a character like Vader, who is literally a man trapped in a machine, the use of a Darth Vader AI voice feels weirdly poetic.

It fits the lore.

What You Can Do Now

If you are interested in how AI is changing media, or if you're a creator looking to use these tools, here is how to navigate the current landscape:

- Check the Credits: Start looking for "Respeecher" or "Synthetic Voice" in the credits of your favorite shows. You'll be surprised how often it's popping up now.

- Explore the Ethics: Read up on the NO FAKES Act and recent SAG-AFTRA agreements. Understanding the "Right of Publicity" is going to be huge as more actors "immortalize" their likenesses.

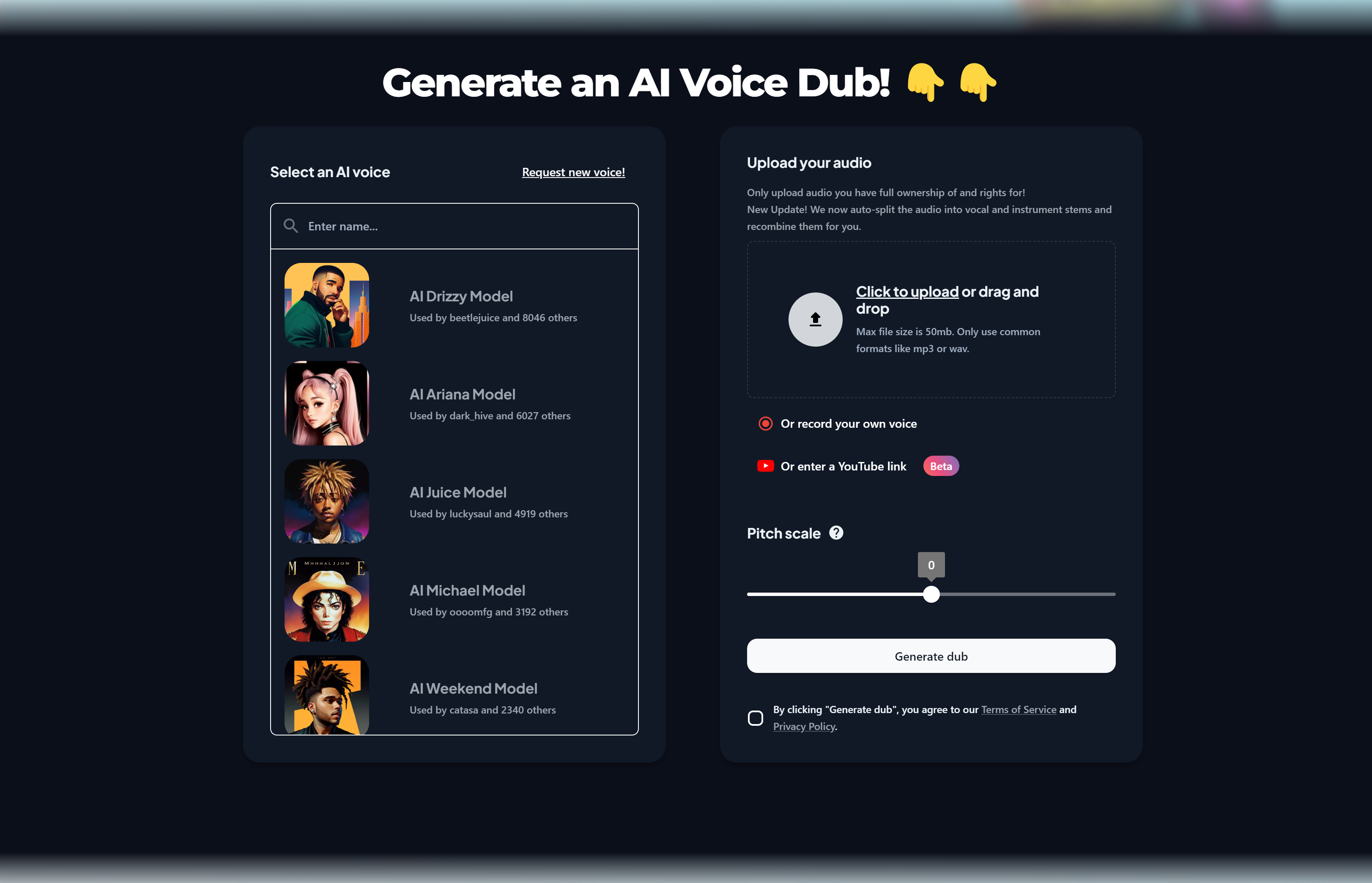

- Try the Tech: You can actually experiment with high-end voice cloning (ethically!) through platforms like ElevenLabs or Respeecher's own small-business tools. Just remember: using a celebrity's voice without permission is a quick way to get a "cease and desist."

- Support Original Voice Talent: While AI is great for legacy characters, new stories need new voices. Follow voice actors on social media to see how they are fighting for "human-in-the-loop" requirements in their contracts.

The era of the "forever actor" is here. Whether that’s a "New Hope" or a "Phantom Menace" depends entirely on how we use the tech.

Stay curious about the tech, but keep your ears open for the human behind the mask. That's where the real magic still lives.