Honestly, the AI world is a bit obsessed with "bigger is better." We've seen models with trillions of parameters that require a small power plant just to answer a simple question about a recipe for sourdough. But back in early 2023, Meta quietly dropped a paper that basically flipped the script. They introduced llama: open and efficient foundation language models, and it started a ripple effect that changed how developers actually build things. Instead of chasing massive size, they focused on making smaller models smarter by feeding them a ridiculous amount of high-quality data.

It worked.

💡 You might also like: How Can I Stream Live TV For Free Without Getting Scammed

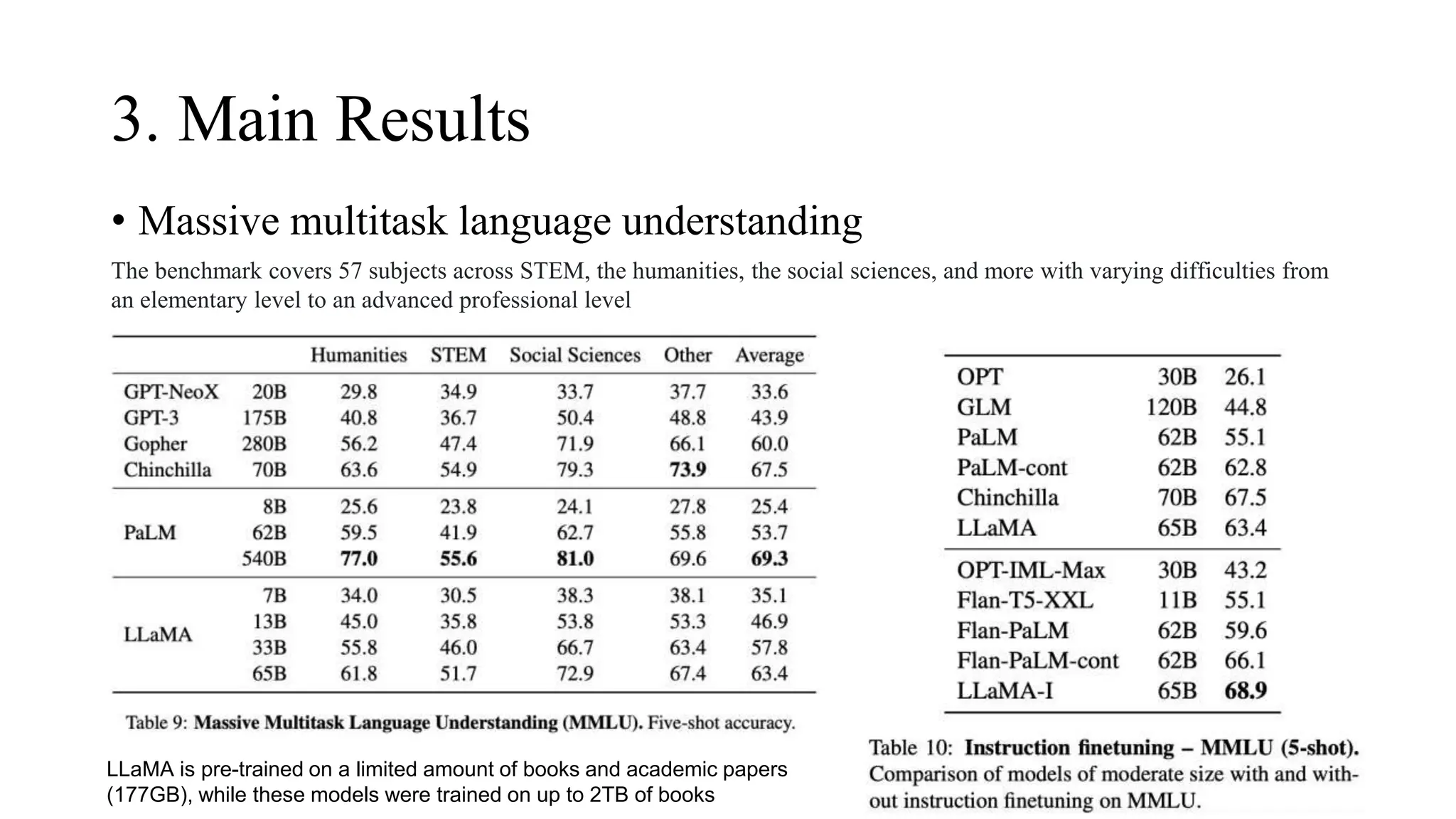

The original Llama-13B model actually outperformed the massive GPT-3 on several benchmarks, despite being ten times smaller. That's not just a technical win; it’s a budget win. If you've ever tried to host a massive LLM on your own servers, you know the "VRAM panic" all too well. Llama proved you don't need a supercomputer to get state-of-the-art results. You just need a model that was trained the right way.

The Secret Sauce of the Llama Architecture

Why is Llama so much faster than the old-school transformers? It’s not one single "aha!" moment. It’s a bunch of smart engineering choices. Meta took the standard transformer and gave it a tune-up. They used RMSNorm for pre-normalization, which keeps the training stable even when the model gets deep. Then they swapped out the standard ReLU activation for SwiGLU. It sounds like a character from a cartoon, but it’s actually a math trick that helps the model learn more complex patterns with less effort.

Another big one is Rotary Positional Embeddings (RoPE). Most models use absolute positioning—basically telling the model "this is the first word, this is the second word." RoPE is more flexible. It helps the model understand how words relate to each other regardless of where they are in the sequence.

Fast forward to 2026, and we're seeing these same principles scaled into the Llama 3 and 4 series. The introduction of Grouped-Query Attention (GQA) was a game-changer for inference speed. Basically, it lets the model share information across different "heads" during processing, which slashes the memory bandwidth needed. This is why you can now run a surprisingly capable version of Llama on a decent laptop or even a high-end phone without it turning into a literal space heater.

Why "Open" Matters More Than You Think

When people talk about llama: open and efficient foundation language models, they often get hung up on the "open" part. Is it truly open source? Technically, it’s "open weights." You can download the model, see exactly how it's built, and run it locally. You aren't stuck paying a "per-token" tax to a big tech company every time your app makes a request.

This transparency is huge for privacy. If you’re a healthcare company or a law firm, you can’t exactly send sensitive client data into a black-box cloud API. With Llama, you keep the data on your own hardware.

Performance vs. Efficiency: The Real Trade-off

| Model Version | Parameters | Context Window | Key Strength |

|---|---|---|---|

| Llama 1 (65B) | 65 Billion | 2,048 tokens | Proved small models could beat GPT-3 |

| Llama 2 (70B) | 70 Billion | 4,096 tokens | Better safety and commercial licensing |

| Llama 3.1 (405B) | 405 Billion | 128,000 tokens | Frontier-level reasoning at scale |

| Llama 3.2 (3B) | 3 Billion | 128,000 tokens | Optimized for mobile and edge devices |

| Llama 4 Scout | 109 Billion | 10M tokens | Massive context and native multimodality |

Looking at that jump to Llama 4 Scout—which just hit the scene—the efficiency gains are staggering. We went from struggling with 2,000 tokens to handling 10 million. That's the difference between reading a paragraph and "reading" fifty novels at once.

Llama 4 and the Shift to Mixture-of-Experts

The latest buzz is all about Mixture-of-Experts (MoE) architecture. Instead of activating every single neuron for every single word (which is what Llama 1 through 3 did), Llama 4 uses a "router." If you ask a question about Python code, the router only turns on the "expert" parts of the brain that know about coding.

It’s like having a team of 128 specialists but only paying the two who are actually working on your specific problem. This is how the 400B parameter Llama 4 Maverick manages to be fast. It only uses about 17 billion active parameters at any given moment. It’s basically a massive library with a very fast librarian.

What Most People Get Wrong About Llama

A lot of folks think that because Llama is "open," it's automatically worse than the "closed" models like GPT-4o or Gemini. That’s just not true anymore. In 2026, the gap has basically vanished for 90% of use cases. Unless you need a very specific, ultra-rare reasoning capability, Llama is often the better choice because you can fine-tune it.

Fine-tuning is the "superpower" of the Llama ecosystem. You can take a base Llama model and feed it your company’s internal documentation, your specific coding style, or your brand’s unique voice. You can't do that effectively with a closed API. You’re basically renting a brain versus owning one and teaching it exactly what you want it to know.

The Problem with Hallucinations

Let's be real: Llama isn't perfect. Like all LLMs, it can still hallucinate. If you ask it about a very niche legal case from 1924 that doesn't exist, it might confidently invent one. Meta has improved this with Reinforcement Learning from Human Feedback (RLHF) and better safety guardrails like Llama Guard, but you still need to verify the output for high-stakes work.

How to Actually Start Using Llama Today

If you're a developer or a tech-curious business owner, you don't need to wait for a salesperson to call you. You can grab these models right now.

- Check out Ollama: This is the easiest way to run Llama on your local machine. It’s basically a one-click installer that handles all the messy backend stuff.

- Browse Hugging Face: This is the "GitHub of AI." You can find thousands of fine-tuned versions of Llama here. Want a version that speaks only in 18th-century pirate slang? Someone has probably built it.

- Use Llama.cpp: If you want to run these models on "potato" hardware (older computers or tiny servers), this C++ implementation is incredibly efficient.

The reality of 2026 is that AI is becoming a commodity. The "moat" isn't the model itself anymore; it's how you use it. By leveraging llama: open and efficient foundation language models, you're choosing a path that offers more control, lower costs, and way more flexibility than the walled gardens of the past.

If you're ready to move beyond just chatting with a bot and want to actually build something, start by looking at your hardware. Determine your VRAM limits first. If you've got 24GB of VRAM (like an RTX 3090 or 4090), you can comfortably run the 8B or even 70B quantized models. From there, it's just a matter of choosing the right "expert" version for your specific task and integrating it into your workflow.